Synthetic identities: addressing the silent epidemic

Knowing that your identity may be changing hands on the dark web right now is deeply unsettling. Unfortunately, it is also highly likely. The fraudulent use of passport photos, addresses and phone numbers has been around, and in the public eye, for a long time. Its newer incarnation, synthetic identity fraud, is a different ballgame: it is heavily under-reported, and it undermines both banks’ anti-fincrime controls and the lives of their customers.

Given its dangers, why does it remain under the radar for both the financial institutions and the customers who bear the monetary and societal repercussions?

Technological advancement means these methods can proliferate quickly and underground while regulations struggle to keep up, and it is still a race that criminals are winning. In light of a proposed International Identity Day, which champions greater visibility and action to protect citizens’ rights to identity privacy, we look at how AI-powered anti-money laundering (AML) solutions can fight back, if the financial world commits to embracing them.

What are synthetic identities?

Traditional identity theft is an act we are familiar with, either through harsh personal experience or in its cinematic portrayals (The Talented Mr. Ripley as an extreme old-school example, or Punch-Drunk Love for an over-the-phone version). It entails fraudsters obtaining a license or a Social Security Number to mimic a real person and potentially drain their finances or exploit them in some way.

Synthetic identities, true to their name, are fabricated. Also dubbed ‘Frankenstein’ entities, they are ‘manufactured’ by conjuring a whole new entity out of thin air from a combination of real and false information. In other, more easily detectable cases, one part of the identity may include genuine personally identifiable information (PII), such as a valid home address, while other details are altered slightly.

Either way, criminals may use these synthetic identities to open accounts and run them responsibly for a while to build up credit ratings, only to max them out and disappear for good. A lender will find it incredibly hard to discredit a synthetic identity when a fraudster has carefully cultivated an image of legitimacy, and there is no actual consumer to report the fraudulent behaviour, because the person technically does not exist.

The environment for the threat to grow

According to the Deloitte Center for Financial Services, synthetic identity fraud is expected to generate $23 billion in losses for the industry by 2030, while Experian has found that only a quarter of 500 financial services companies feel confident in addressing this particular issue.

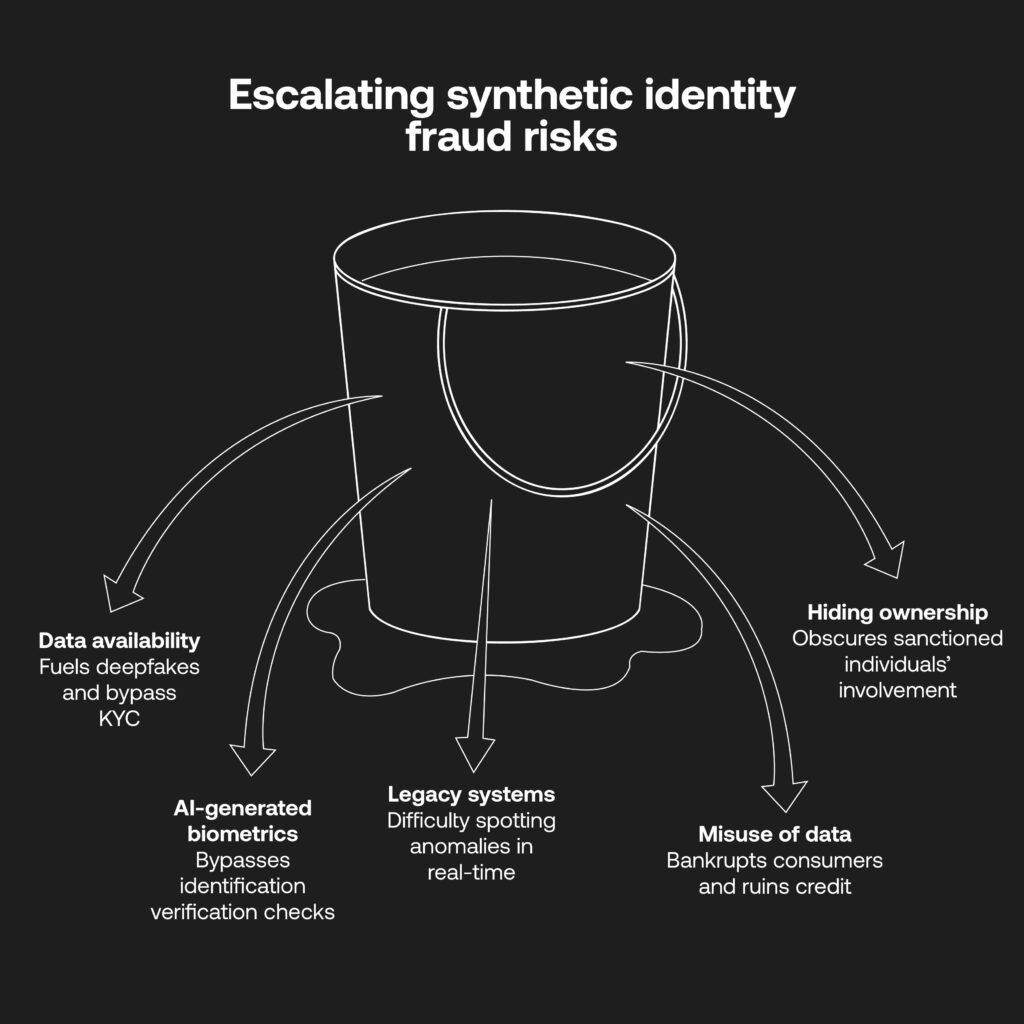

Given that the sector is still catching up to this risk, those numbers will only worsen when criminals turn up the heat. Hacks and data leaks keep a plentiful supply of data available on the dark web, in corners unknown to most people other than specialist digital crime agencies.

Stolen data can be used to create deepfakes, and AI-generated biometric information can bypass identification checks at the critical know your customer (KYC) stage to open an account at a bank, fintech or lender. In more advanced attacks, criminals can also ‘inject’ fake information into the identity verification technology itself. Legacy rules-based systems, which have difficulty spotting anomalies at all, let alone in real time, do not stand a chance.

The misuse of personal data can bankrupt consumers and ruin their credit scores and borrowing capacity. It also leaves even strengthened digital onboarding processes at financial institutions (FIs) vulnerable, not just for individual customers but for business relationships too. Synthetic identities have been used to hide the beneficial ownership information of sanctioned individuals, removing evidence of their stake or involvement and increasing the risks involved with third-party vendors or partners.

How AI & automation must adapt

With what are effectively ‘ghost’ entities operating constantly, FIs cannot rely on a defrauded customer coming forward to be compensated. Their AML strategies have to adapt now to detect and prevent these sophisticated methods, which are already eroding integrity in the financial system. Faulty banking AML systems ruin reputations and cost millions, or more, in fines. The cost of installing quality digital identity verification (IDV) software can also trickle down to customers, raising their fees and slowing their account-opening experience.

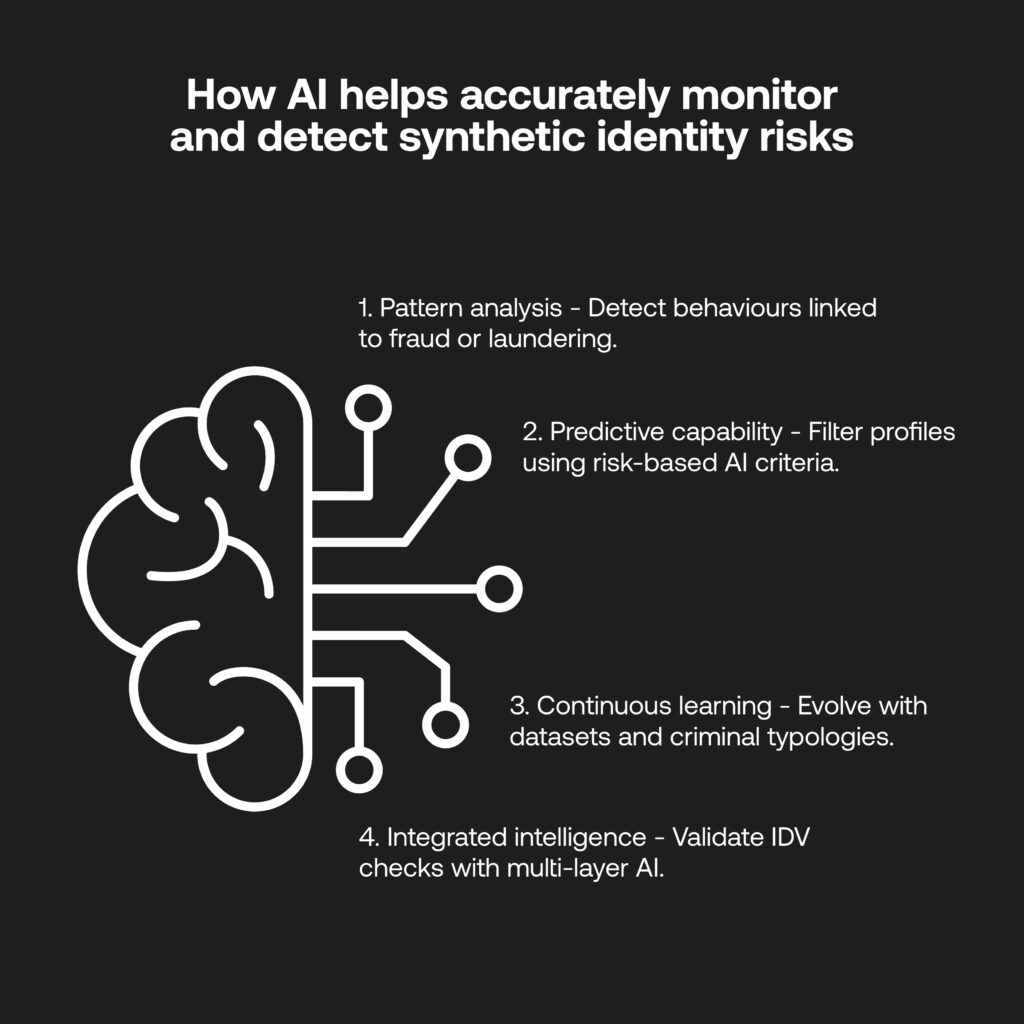

Essentially, FIs must be able to tell fake identities and transactions from genuine ones in less than a second. That’s a tough ask, but the ‘good side’ of artificial intelligence allows for significantly more accurate monitoring and detection of synthetic identity risks:

- Pattern analysis: across vast, complex and diverse datasets, AI can identify anomalous behaviours that may indicate fraud or laundering techniques.

- Predictive capability: following a compliance team’s pre-determined risk criteria for individuals or businesses, AI is able to filter potentially threatening profiles for further investigation.

- Continuous learning: by repeatedly training on historical datasets and criminal typologies, the technology can pre-empt evolving risk and flag only high-risk entities, letting legitimate customers onboard and transact efficiently.

- Integrated intelligence: through a combined AML system, IDV checks can be validated through multi-tier AI-driven authentication.
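As a rough illustration of the pattern-analysis idea, the sketch below looks for one signal often associated with fabricated identities: the same identifier (here a phone number) reused across several unrelated names. It is a toy example under stated assumptions, not a description of any vendor's system; the function name `shared_identifier_clusters`, the field names and the `min_names` threshold are all hypothetical.

```python
from collections import defaultdict


def shared_identifier_clusters(applications, key, min_names=3):
    """Return identifier values that appear under at least `min_names` distinct names.

    `applications` is a list of dicts; `key` is the field to group on.
    The threshold is an illustrative assumption, not an industry standard.
    """
    names_by_value = defaultdict(set)
    for app in applications:
        value = app.get(key)
        if value:
            # Normalise names so trivial case/spacing differences do not count as distinct.
            names_by_value[value].add(app["name"].strip().lower())
    return {
        value: sorted(names)
        for value, names in names_by_value.items()
        if len(names) >= min_names
    }


# Made-up sample applications for demonstration only.
applications = [
    {"name": "Ana Reyes", "phone": "555-0101", "address": "12 Elm St"},
    {"name": "Ben Okoro", "phone": "555-0101", "address": "98 Oak Ave"},
    {"name": "Cara Lind", "phone": "555-0101", "address": "7 Pine Rd"},
    {"name": "Dev Shah", "phone": "555-0199", "address": "12 Elm St"},
]

flagged = shared_identifier_clusters(applications, "phone")
print(flagged)
```

A real deployment would combine many such signals and learn their weights from data; a single shared phone number can have innocent explanations, such as a family or a shared office line, so a cluster like this is a prompt for investigation and not a verdict.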

Of course, AI is not a panacea for synthetic identity fraud. But it is a facilitator for advanced IDV adoption, and compliance professionals can tune it to their organisation’s risk appetite to dramatically increase detection rates.

Proactive steps for banks

With AI-based tools offered and supported by compliance platforms, IDV will become more adept at recognising the common tactics used by synthetic identity fraudsters. It can help the ecosystem stay one step ahead of whatever they pull from their box of tricks next, so long as RegTech partnerships are adopted, governments take action, and knowledge and experience of the new threats are shared.

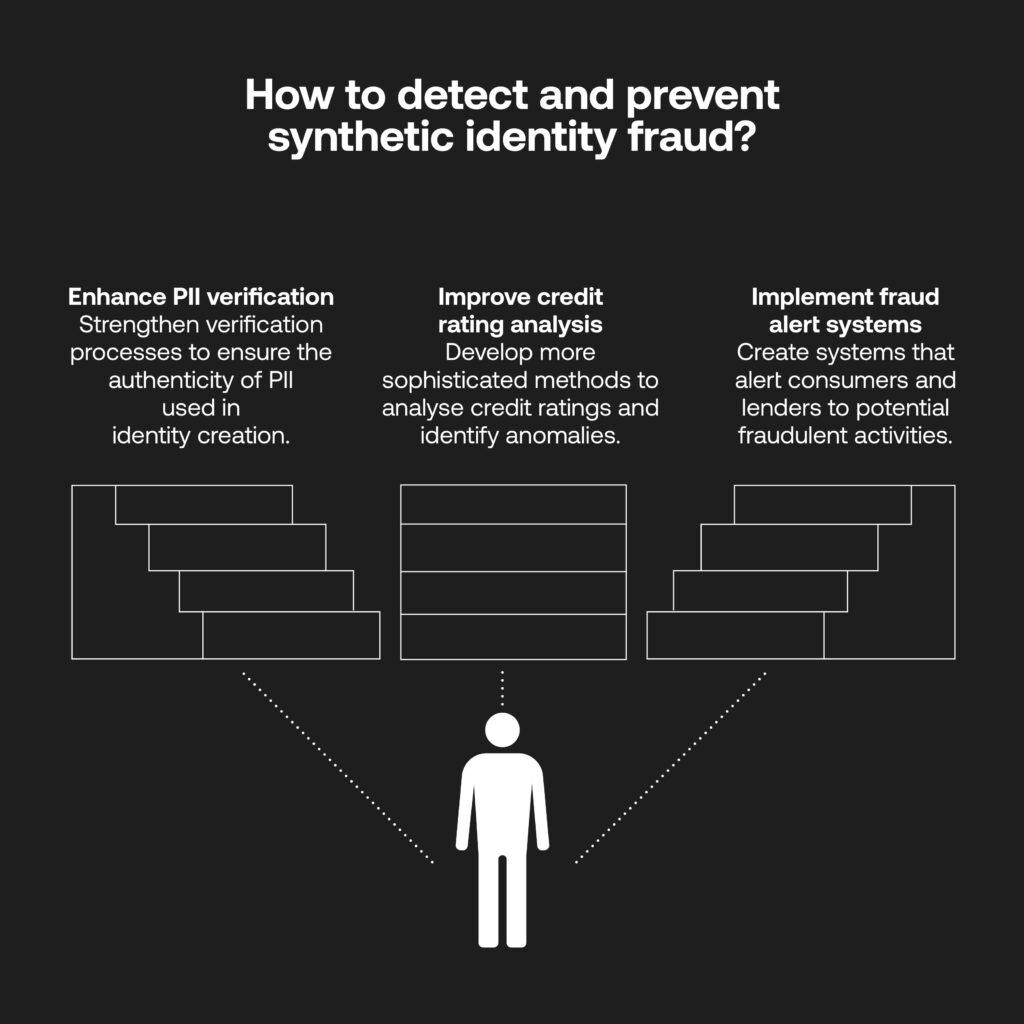

As a start, platforms need to offer layered IDV: document validation and data enrichment against trusted government-approved sources; multi-factor authentication; facial recognition technology; and liveness checks. At the first stage of onboarding, this sets a precedent for safe customer experiences through the AML process, where risk models attuned to synthetic-specific risk can work around the clock to monitor transactions for fraudulent behaviour and raise alerts for enhanced due diligence.
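To make the layering concrete, here is a minimal sketch of how the outcomes of those checks might be combined into one onboarding decision. Everything in it is an assumption for illustration: the `IdvChecks` fields, the 0.90 face-match threshold, and the rule that a single failed layer routes to enhanced due diligence while two or more lead to rejection. A real platform would set these according to its own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class IdvChecks:
    document_valid: bool      # document validation
    source_data_match: bool   # enrichment against a trusted source
    mfa_passed: bool          # multi-factor authentication
    face_match_score: float   # 0.0 to 1.0 (hypothetical scale)
    liveness_passed: bool     # liveness check


def onboarding_decision(checks, face_threshold=0.90):
    """Combine the layered checks into a decision plus the list of failed layers."""
    results = {
        "document_validation": checks.document_valid,
        "data_enrichment": checks.source_data_match,
        "multi_factor_auth": checks.mfa_passed,
        "facial_recognition": checks.face_match_score >= face_threshold,
        "liveness": checks.liveness_passed,
    }
    failed = [name for name, ok in results.items() if not ok]
    if not failed:
        return "approve", failed
    if len(failed) == 1:
        # One weak layer: escalate to a human reviewer rather than decide automatically.
        return "enhanced_due_diligence", failed
    return "reject", failed


clean = IdvChecks(True, True, True, 0.97, True)
suspect = IdvChecks(True, True, True, 0.97, False)
print(onboarding_decision(clean))
print(onboarding_decision(suspect))
```

Returning the failed layers alongside the decision keeps the outcome explainable, which matters when an analyst has to justify why an applicant was escalated.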

Just as AI-driven monitoring identifies risk factors continuously, perpetual KYC (pKYC) will become an integral part of the post-account-creation process. Initial checks and periodic reviews are no longer enough to meet strict regulatory protocols, whereas pKYC allows customer risk profiles to be updated in real time whenever an AML system identifies a change, keeping data up to date.
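The difference from a periodic review can be sketched as an event-driven update: each change detected on an account adjusts the risk profile immediately, with no waiting for a scheduled check. The event names, weights and band thresholds below are invented for illustration and would be defined by a compliance team in practice.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical event weights; real values would come from a firm's risk policy.
RISK_WEIGHTS = {
    "address_change": 10,
    "new_device": 5,
    "ownership_change": 25,
    "sanctions_hit": 60,
}


@dataclass
class CustomerProfile:
    customer_id: str
    risk_score: int = 0
    last_updated: Optional[datetime] = None
    history: List[str] = field(default_factory=list)


def apply_event(profile, event_type, now=None):
    """Update the profile as soon as a change is detected and return its risk band."""
    weight = RISK_WEIGHTS.get(event_type, 0)  # unknown events leave the score unchanged
    profile.risk_score = min(100, profile.risk_score + weight)
    profile.last_updated = now or datetime.now(timezone.utc)
    profile.history.append(event_type)
    if profile.risk_score >= 60:
        return "high"
    if profile.risk_score >= 30:
        return "medium"
    return "low"


profile = CustomerProfile("cust-001")
bands = [
    apply_event(profile, event)
    for event in ("address_change", "ownership_change", "sanctions_hit")
]
print(bands, profile.risk_score)
```

In this toy model the score only ever rises; a fuller version would also let risk decay over time or be reset after a completed review.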

The onus is not only on FIs, but also on regulators and global agencies to advocate for synthetic identity awareness. Great strides are already being made here with the call for September 16 to be recognised as International Identity Day, aligning with a UN initiative to recognise legal identity as a fundamental human right by 2030.

Support has come from pan-continental organisations (ID4Africa), the World Bank Group, the Biometrics Institute, humanitarian charities such as UNICEF, and financial giants Visa and Mastercard, among others. Data privacy concerns have quickly been written into law, and going further will ensure responsibility surrounding identity online and offline.

An end to silence is vital

The unfair use of personal data is a direct and harmful practice that is not being taken seriously enough. Before it gets worse, there is an urgent need to recognise warning signs and build risk-based protocols into an AI-driven compliance platform that can do a world of good, not just to stave off the threat of a regulatory fine, but to protect the names, lifestyles and rights of customers all around the globe. Synthetic identity fraud is another pointed reminder that KYC and AML are a crucial safeguard and not a checklist afterthought. The time to act is now.

For more insight into identity verification, pKYC, and the emerging threat of deepfakes, talk to the RelyComply team today, or delve into our resources on the power of advanced RegTech to combat the digital frontier of financial crime.

Disclaimer

This article is intended for educational purposes and reflects information correct at the time of publishing. That information is subject to change, and we cannot guarantee that it will be accurate, timely or reliable for use in future cases.