The complex fraud lifecycle and rise of re-targeting scams

It’s naive to believe in the phrase “fooled once, never fooled twice” when it comes to the modern fraud lifecycle – where fraudsters deliberately target the same scam victims time and time again, using advanced technology to feed their voracious appetites.

Particularly on digital channels, the world is experiencing a growing phenomenon of victim recycling, in which initial victims of scams are groomed as money mules, or pulled into a long-winded fraud cycle that’s tricky to detect. It shows the dark side of data harvesting and sharing, and why integrated fraud and AML tools have to converge to release repeat victims from the stranglehold of this complex pattern of behaviour.

The hidden truth of fraud revictimisation

In RelyComply’s latest Laundered podcast, Gareth Dothie (Head of Bribery and Corruption at City of London Police) noted how even the UK’s mature economic market is overwhelmed by the concept of repeat exploitation. While scams have existed for centuries (since the first tax evaders in Ancient Egypt) and are known dangers for the general public, re-targeting scams within a few months of a ‘first bite’ are a distinctly harmful threat.

Scarily, they’re becoming routine. In the hands of highly functional international groups, one person’s data is never held by just one scam artist. Through data sharing and the wide-ranging expertise of multiple fraudsters – from social engineers to launderers and cryptotumblers – syndicates exploit initial victims while they’re down. Once victims are identified as vulnerable weak links, they can be passed on to help criminals operating in other areas.

The commodification of victim recycling

The collection of scam victim data is therefore what allows the fraud lifecycle to scale up. Criminal networks squeeze huge value from fewer targets, and grow from the size of a startup to a global enterprise exceedingly quickly. Victims are essentially low-hanging fruit, likely to hand over more personal information and money under increased manipulation – showing how unempathetic criminals pursue their nefarious ends in a business-like manner.

Anyone can have their data stolen, given how much we hand over to healthcare providers, banks, social media accounts, airlines, e-commerce sites and more. Data breaches are usually conducted with financial gain in mind: around 3.18 million fraud offences and 1.02 million computer misuse offences were recorded in the UK alone last year.

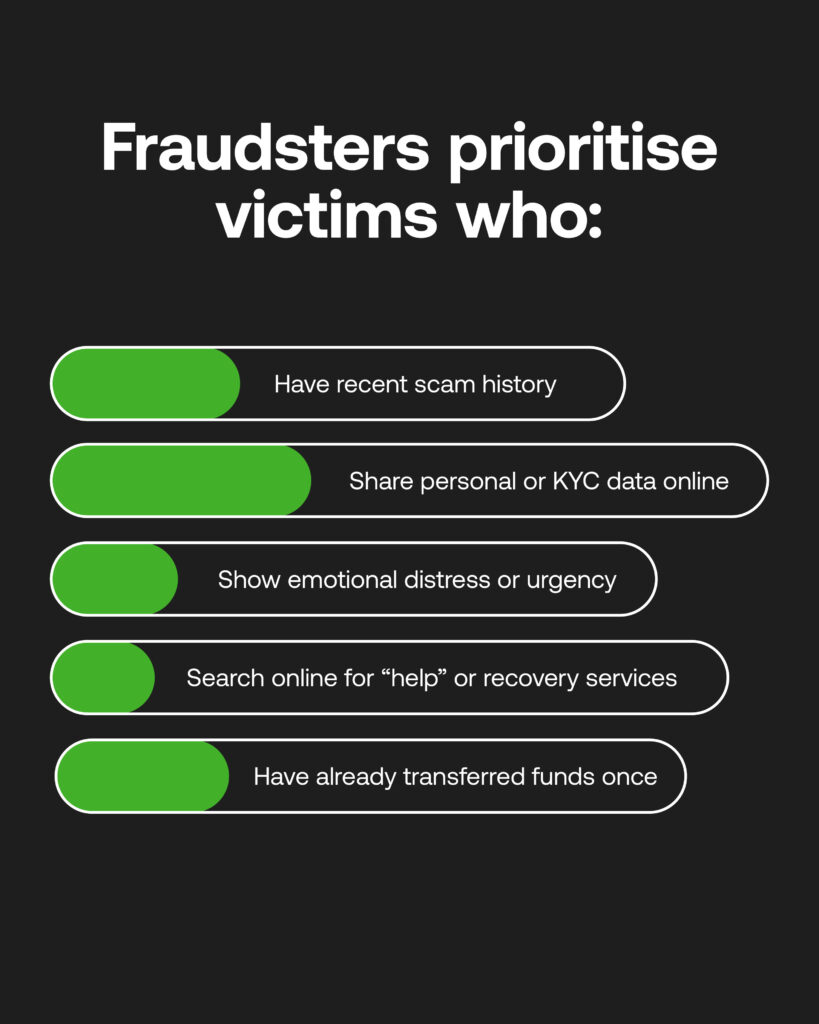

Initial victims may feel unlucky rather than targeted, or be in denial about their poor personal data security habits, such as failing to protect or update passwords. Others will be filled with huge emotional distress, sometimes thinking they have an obligation to appease their attackers. With addresses, bank details and even locations garnered from online photographs in criminals’ hands, blackmail becomes a ready means of escalating follow-up fraud.

Victim lists on the dark web

For scammers armed with KYC information, the digital underworld becomes a playground in which to evade even the most vigilant anti-fincrime agencies, and where ‘scam traffic’ blows up. For instance, recency bias may see newly involved victims increase their digital footprint while looking for help online, to their detriment.

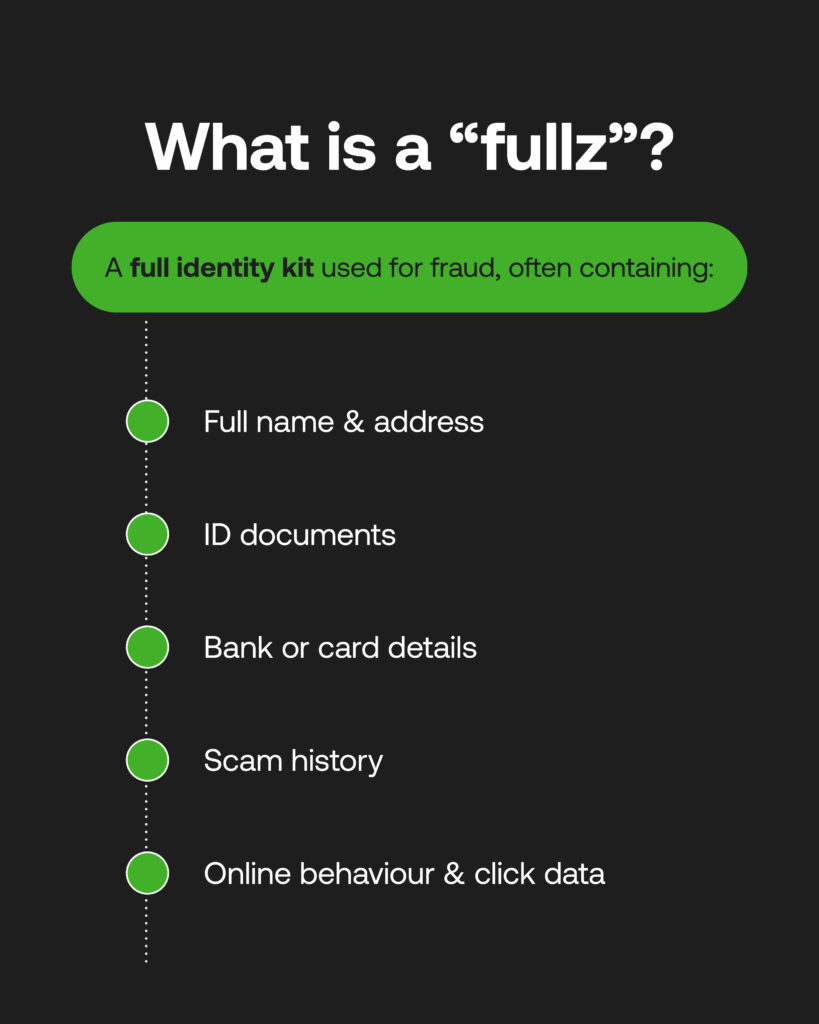

These fragmented GPS tags, contact details, IDs, scam histories, and click habits all help criminals build victim profiles (full identity kits, or ‘fullz’) that get shared through the dark web for fraud revictimisation, via connected networks that are near-impossible to break away from. Organised groups can track the scale of such operations by maintaining databases of fullz: essentially a CRM-style tool that fuels the dark web marketplace and runs on gathered intelligence.

Because these groups take a worldwide approach, attacks can come as repeated socially engineered calls and emails with multi-language scripts. Those first affected by romance scams become prime targets for investment scams, while those that succumb to phishing may be more inclined to respond to impersonation scams. The extent of the accumulated data informs nurture sequences that are closely tailored to each victim based on their interactions.

Recovery scams and money mule recruitment

The majority of the time, this repeated exploitation takes place using ‘recovery scams’. Criminals pose as legitimate entities (through increasingly smart AI-generated email templates) that could recover the losses from an original dupe. To coerce victims, these fronts imitate authoritative, helpful services such as police forces, fraud agencies, refund agents, cybersecurity firms or banks.

The fraudsters use victims’ stolen KYC details against them, as victims may have given such information to legitimate companies before, or be made to feel they’re assisting fraud investigations. Trust-building leads to additional funds being extracted with increasing voracity, by pressing on the specific psychological vulnerabilities of each unwitting investor.

When shame, fear, and compromised data all combine and spiral, many victims can feel powerless against criminals’ demands and become money mules: used as vehicles to receive or move stolen funds in disparate and hard-to-trace transactions. Sometimes, victims are kept under the illusion that the muling work is purely legitimate. Elsewhere, criminals threaten to leak victims’ ID documents and highly personal information, or to report them to authorities and known criminal participants.

Where fragmented data helps repeat victimisation fraud

The ‘siloisation’ of financial institutions has left gaps for criminals to weave between. This does not just apply to separate systems for onboarding, due diligence, transaction monitoring, and reporting, but also to the varying customer data and workflows used by fraud and AML teams, who have largely conducted their investigative work apart. Traditionally, fraud teams would log an initial scam event with no links to AML teams, who were tasked with identifying mule-like behaviour (without any context behind the offence and among swathes of reports).

This is odd considering the clear overlaps in the red flags for repeat victimisation fraud, which financial institutions now have to detect early to prevent the onset of ramped-up money mule recruitment, including:

- Multiple disputes or scam reports made from one person in a short period

- “Refund” or “Recovery fee” references

- Unusual behavioural spikes (and amounts) in transaction activity

- Accounts gaining large third-party deposits out of the blue

- Victims sending multiple transfers to high-risk areas and accounts

Some muling signs are more discreet, like a reluctance to divulge KYC information or defensiveness around payment purposes. It’s tricky to understand to what extent customers may be pressured to act fast by criminals in external communications.
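As a rough illustration, the transaction-level red flags listed above could be expressed as simple rules. The sketch below is a minimal example under stated assumptions, not a production detection model: the `Transaction` fields, the keyword list, the 90-day window and the spike multiplier are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median

# Hypothetical keywords and thresholds, for illustration only.
RECOVERY_KEYWORDS = ("refund", "recovery fee")
REPORT_WINDOW = timedelta(days=90)
MIN_REPORTS_IN_WINDOW = 2
SPIKE_MULTIPLIER = 5.0


@dataclass
class Transaction:
    when: datetime
    amount: float
    reference: str
    inbound: bool       # True if money arrives in the customer's account
    third_party: bool   # True if the counterparty is not the customer


def red_flags(scam_reports: list[datetime], txns: list[Transaction]) -> set[str]:
    """Return the names of the rule-based red flags raised for one customer."""
    flags: set[str] = set()

    # Multiple disputes or scam reports from one person in a short period.
    reports = sorted(scam_reports)
    for i, start in enumerate(reports):
        in_window = [r for r in reports[i:] if r - start <= REPORT_WINDOW]
        if len(in_window) >= MIN_REPORTS_IN_WINDOW:
            flags.add("repeat_scam_reports")
            break

    # "Refund" or "recovery fee" wording in payment references.
    if any(k in t.reference.lower() for t in txns for k in RECOVERY_KEYWORDS):
        flags.add("recovery_reference")

    # Large third-party deposits compared with the customer's typical inbound amount.
    inbound_amounts = [t.amount for t in txns if t.inbound]
    if inbound_amounts:
        typical = median(inbound_amounts)
        if any(
            t.inbound and t.third_party and t.amount > SPIKE_MULTIPLIER * typical
            for t in txns
        ):
            flags.add("third_party_deposit_spike")

    return flags
```

Rules like these only surface candidates for review. The more discreet behavioural signs still depend on analyst judgement and on context shared between fraud and AML teams.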

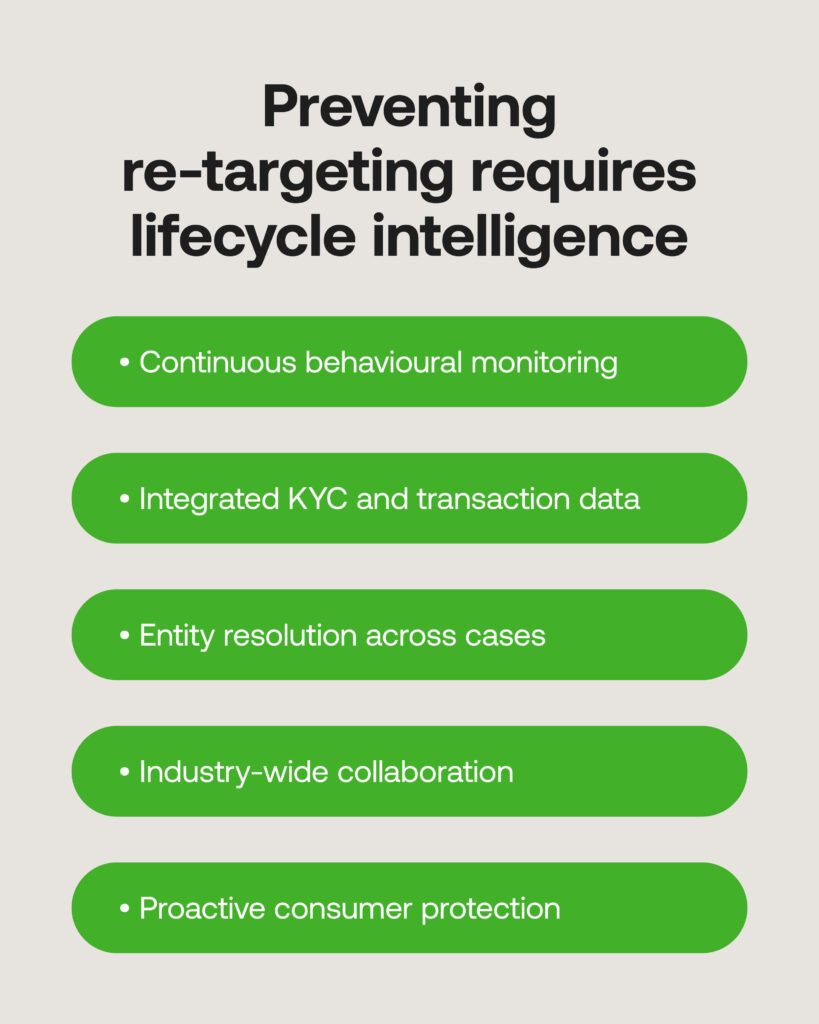

Ultimately, consumer protection is a central aspect of quality AML and fraud prevention. Connected solutions should address the risk proactively, rather than acting once victims are too heavily implicated in schemes, or have lost all of their hard-earned savings.

Integrated protections for vulnerable customers

Manually spotting methods of victim recycling will not do much considering the rate at which fraud networks work. Instead, integrating continuously updated KYC and transaction data with end-to-end detection is paramount to raise and act on suspicious signs, and to determine the next steps for reporting cases of fraud right away:

- Behavioural monitoring and risk scoring

Users can configure risk alerts for any keywords or payment references indicative of muling, while thresholds can cap unusual transaction amounts, or monitor for anomalous payment sequences and payments from high-risk industries and jurisdictions.

- Entity resolution

A consolidated source of truth can spot links between victim profiles across multiple instances, building an audit trail of potential repeat victimisation fraud for reporting purposes. Similarly, reused scam typologies can be linked to common actors, primed for further investigation.

- Rapid identification across the financial industry

When early warning triggers and smooth escalation protocols are enacted in AML systems at banks, fintechs, regulators and law enforcement, they produce evidential and shareable data which, through close-knit partnerships and digital uptake, strengthens safeguards for exploited members of the public.
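To make the entity resolution idea above more concrete, here is a minimal sketch that groups case records sharing any identifier, using a union-find structure. It assumes fraud and AML records are simple dictionaries with an `id` and optional `email`, `phone` and `account` fields. These field names and the exact-match rule are hypothetical, and real systems typically add fuzzy matching and confidence scoring on top.

```python
from collections import defaultdict

# Hypothetical identifier fields used to link records.
IDENTIFIER_FIELDS = ("email", "phone", "account")


def link_records(records: list[dict]) -> list[set[str]]:
    """Group record ids that share at least one identifier value."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x: str) -> str:
        # Walk up to the group's root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Remember the first record seen for each normalised identifier value.
    first_seen: dict[tuple[str, str], str] = {}
    for r in records:
        for field in IDENTIFIER_FIELDS:
            value = r.get(field)
            if not value:
                continue
            key = (field, value.strip().lower())
            if key in first_seen:
                union(first_seen[key], r["id"])
            else:
                first_seen[key] = r["id"]

    groups: dict[str, set[str]] = defaultdict(set)
    for record_id in parent:
        groups[find(record_id)].add(record_id)
    return list(groups.values())
```

In this sketch, a fraud report and an AML alert that share an email address, and a third record that shares an account number with the alert, end up in one group even though the first and third records have nothing directly in common. That transitive linking is what lets separate scam events be read as one victim's history.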

If improvements get made per institution – teaming up with RegTech providers, and integrating their AML and fraud teams and systems – it’s a great start leading to a hugely improved anti-fraud whole, where consumers, fraud agencies and financial institutions trust each other as a solidified defence to these kinds of harmful fraud methods.

Lifecycle intelligence: Preventing re-targeting scams

Primary fraud attacks are hard enough on victims, and a whole fraud lifecycle can do damage beyond repair. As this is fast becoming usual behaviour for scammers, fuelling all manner of money laundering and the heinous crimes it perpetuates, financial institutions have a moral duty to understand the threat and act quickly to battle it.

By recognising the importance of fraud prevention tools in the wider AML dialogue, and by installing risk-based tools that prevent further exploitation and protect victims, institutions can disrupt criminal networks and reduce their feverish tenacity by closing commonly infiltrated gaps. Fraud revictimisation is a slippery slope, and we all need to be wiser to its potential in order to push back effectively.