The future of AI-led compliance lies in human hands

If 2025’s main challenge involved driving as much value from IT investments as possible, this year marks another course-correction: we must prioritise human oversight for an actionable AI-led compliance environment to thrive.

WebOps teams have increasingly needed hard skills to build and accommodate anti-money laundering (AML) systems, with considerations for perpetual maintenance and financial optimisation (FinOps), extra hires and education around cloud migration, and security-first development squads, all of which we deemed key areas for software innovation last year.

Further, these movements have grown alongside the inescapable narrative around AI adoption for regulatory purposes. But it’s not the existence of algorithmic AI itself that poses risks for financial institutions (FIs); it’s that our rapid ‘tech race’ could expose the widening talent gap within businesses, and between various players protecting the industry and their customers.

For industry-wide AI systems to remain a powerful, agile and valuable compliance asset, we need people with an integral understanding of AI and of the ethical and interpretability concerns it raises. Those that explore these essential ‘human-in-the-loop’ team approaches in 2026 can evolve AML to strengthen an uncertain anti-fincrime future.

Operational evolutions in AML

AI’s strong data processing power is a mainstream notion in AML: automations can amplify productivity, underpin monitoring for real-time detection and verification efforts, and drive risk-based protocols, while flexible cloud-based infrastructures can scale operations. These are a brilliant foundation, yet they are nullified without a compliance team’s sign-off.

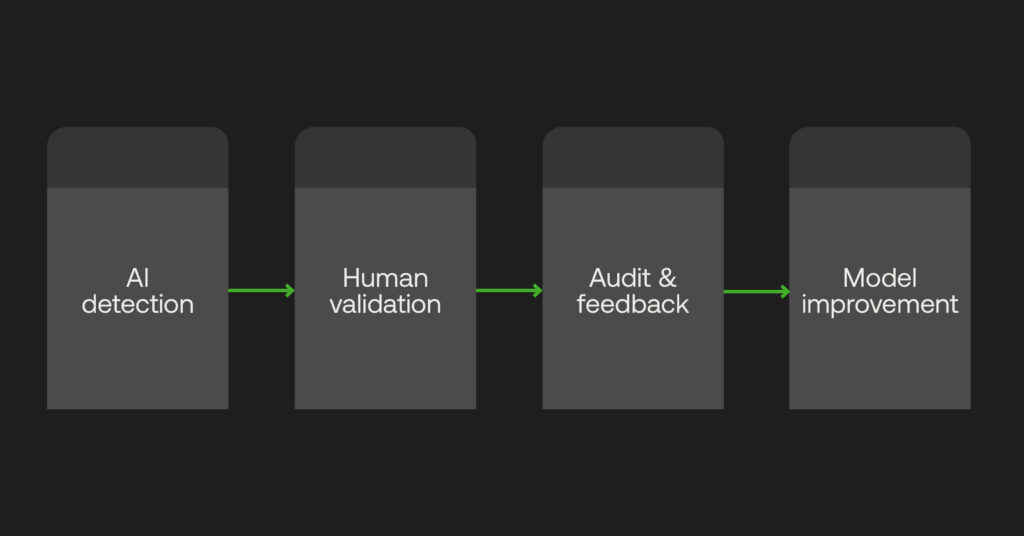

AI’s outputs must be interpretable by professionals to determine whether workflow efficiency gains are backed up by the system’s accuracy in flagging fincrime risk. Where AI models play such a distinct role in transaction monitoring and reporting, tracking their decision-making in line with how they influence human compliance strategy is mandatory for supervisory audits, and serves as evidence that platform and people are cooperating to drive AML in practice.

Greater AI literacy

While the swift evolution of AI for advanced use cases is welcome, it compounds a forecasted issue: FIs’ development teams require experienced data engineers adept at using AI risk models for their specific jobs, and must upskill the compliance teams that maintain AML systems and AI productivity tools every day.

This applies not only to training AI models against large data sets (particularly to improve pattern recognition for, say, payment risk monitoring) but also to challenging the outputs that the machines produce. Compliance officers must be able to validate their investigative findings against how they’ve used AI to arrive there, and address any biases or other errors where an algorithm may have misinterpreted its source data or prompts. This incentivised feedback loop helps to improve AI models over time.
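As a rough illustration of that feedback loop, the Python sketch below records a compliance officer's verdict against each model alert, measures how often the model was right, and pulls out the high-confidence alerts the officer rejected. The `ReviewedAlert` schema, the function names and the 0.8 score floor are all hypothetical, chosen only for this example; a real AML platform will have its own data model and thresholds.

```python
from dataclasses import dataclass


@dataclass
class ReviewedAlert:
    """One model alert plus the officer's verdict (hypothetical schema)."""
    alert_id: str
    model_score: float       # risk score assigned by the model, 0.0 to 1.0
    analyst_confirmed: bool  # True if the officer judged the alert genuine


def alert_precision(reviews):
    """Share of reviewed alerts that the officer confirmed as genuine."""
    if not reviews:
        return 0.0
    confirmed = sum(1 for r in reviews if r.analyst_confirmed)
    return confirmed / len(reviews)


def disputed_alerts(reviews, score_floor=0.8):
    """Alerts the model scored highly but the officer rejected.

    The 0.8 floor is an assumed value; these cases are candidates for
    bias checks or retraining data.
    """
    return [
        r for r in reviews
        if r.model_score >= score_floor and not r.analyst_confirmed
    ]


# Illustrative data only, not real alerts.
reviews = [
    ReviewedAlert("A-1", 0.95, True),
    ReviewedAlert("A-2", 0.91, False),
    ReviewedAlert("A-3", 0.62, False),
    ReviewedAlert("A-4", 0.88, True),
]

print(alert_precision(reviews))                        # 0.5
print([r.alert_id for r in disputed_alerts(reviews)])  # ['A-2']
```

Tracking a figure like this over time gives officers a concrete basis for challenging the model, rather than accepting or dismissing its alerts case by case.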

Maintaining accountability

FIs house sensitive customer data, which motivates cybercriminals whose threats are quickly becoming more dangerous through biometric fraud and connected devices. As such, cybersecurity standards have become more complex, both to keep criminals at bay and to hold businesses accountable for their data governance.

Beyond the growing need to configure AML systems in line with certifications such as ISO 27001, human-led Development, Security, and Operations (DevSecOps) skills are required to prove AI-based platforms’ intrusion prevention and adherence to regional data privacy laws. Where an over-reliance on technology may undermine compliance teams’ duty of care to protect customer data from criminal infiltration, and therefore their trustworthiness, human intervention ensures that cybersecurity measures are implemented and then tested frequently.

Establishing transparency in AI-led compliance

Likewise, growing regulatory concerns around AI throw into question the fair use of high-risk technology. Laws such as the EU’s AI Act are making it mandatory to identify how AI systems are designed, ensuring businesses document how data logs are collected, stored and interpreted. This boosts transparency with regulators and customer bases alike.

Particularly when developing risk models, black box algorithms offer no way to trace or understand their outcomes. Explainable AI (XAI) underpins AML systems to provide clarity around their actions, again supporting a human-in-the-loop approach whereby compliance teams can more easily justify automated decisions, mitigate bias or debug under-performing AI models.
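To make the contrast with a black box concrete, here is a minimal Python sketch of a fully transparent, points-based risk score that reports how much each factor contributed to the total. It is a deliberately simple stand-in for XAI techniques, not a description of any particular product: the factor names, the point weights and the `explain_score` function are assumptions made for illustration.

```python
# Hypothetical risk factors and point weights, for illustration only.
WEIGHTS = {
    "amount_over_threshold": 50,
    "high_risk_jurisdiction": 30,
    "new_counterparty": 20,
}


def explain_score(features):
    """Return the total risk points and each factor's contribution.

    `features` maps factor names to True/False; missing factors count
    as False. Contributions are ranked so a reviewer sees the biggest
    drivers of the score first.
    """
    contributions = {
        name: weight * int(bool(features.get(name, False)))
        for name, weight in WEIGHTS.items()
    }
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, ranked


total, ranked = explain_score(
    {"amount_over_threshold": True, "new_counterparty": True}
)
print(total)  # 70
for name, points in ranked:
    print(f"{name}: {points}")
```

Because every point in the total traces back to a named factor, a compliance officer can justify or dispute the decision line by line, which is the property that more sophisticated explainability methods aim to recover for complex models.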

Fostering cultural shifts

The compliance function has changed face into a more technically advanced outfit, and this affects the makeup of an entire FI that has had to juggle the operational challenges of rebuilding IT departments for cloud computing capabilities and hiring FinOps experts to drive AML return on investment. Systems, personnel and ongoing training are all budget bugbears for finance officers, even though AI is employed to streamline workflows and minimise unnecessary overheads.

A management-level fix is needed to rectify this, with technologists and compliance officers collaborating more closely when purchasing, integrating, deploying and managing AI-based software in line with the business’ needs. Cross-referenced expertise and skill-building get separated teams on the same page in terms of AI readiness for impactful AML compliance, proving AI’s worth as a multi-use revenue generator that bolsters the entire FI’s tech-driven compliance culture.

AI will continue to be seen as a regulatory hurdle to grapple with on top of dominating compliance requirements. However, the future of AML depends on it. Complementing these systems with human oversight is non-negotiable: it betters both our understanding of AI-led compliance platforms and what we can do with them, and prepares the compliance workforce through skilled AI governance, a desired asset at any innovative, fast-acting institution.