AI literacy: preparing compliance teams for the algorithmic age

Artificial intelligence was once an alien concept reserved for the movies: think HAL 9000, Skynet, or the robots of I, Robot. Today, it’s becoming intrinsic to day-to-day compliance operations, or at least it needs to be. While it’s easy to get bogged down in the technical details of how models are trained and coded, what regulatory leaders actually need is AI literacy: a practical understanding of how AI works, where it adds value, and where its risks and limitations lie. Understanding more than AI’s basic use is now essential to effective, defensible compliance.

Fortunately, AI already has well-defined applications in financial crime compliance, from perpetual KYC (know your customer) profile updates to continuous transaction monitoring. When institutions equip their compliance leads with education on evolving models, data hygiene, bias, and the limitations of generative AI, AI becomes far more effective within anti-money laundering (AML) protocols.

With that grounding, the entire ecosystem can build its expertise and AI literacy, narrowing the gap between machine-powered automation and the human accountability needed for nuanced, high-risk investigative work.

Challenging every machine decision

Even where generative AI is commonplace, the quality of its output draws plenty of criticism. As we have grown attuned to large language models (LLMs) in everyday digital life, the differences between their output and a human creator’s have become easier to spot, whether ‘unnatural’ phrasing or contextual blind spots. AI acts on its inputs, and careless implementation will always produce questionable results.

The same principle applies to more specialised AI use. When AI is deployed to flag potentially suspicious behaviour and activity, its outputs cannot be taken as gospel, even when the model has been trained on accurate historical data. With some scrutiny, stark differences emerge between an AI’s very literal reasoning towards a decision and the intuition a compliance officer develops over years, perhaps decades, of anti-financial crime detective work.

Even with ethically implemented models, an AI’s ‘truth’ only holds when complemented by human understanding. Reviewing AI outputs keeps compliance teams confident in their automated AML protocols. They are not passive system operators clicking buttons; they continuously verify that the data feeding AI training sets is correct, and they can defend the work of their investigative tools.

AI governance today

This is why ‘black box’ algorithms are falling out of favour. Because they are opaque about how they derive results from the data they learn from, a compliance officer has no way to corroborate the legitimacy of their insights.
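To make the contrast concrete, here is what a transparent alternative can look like: an additive risk scorecard that reports exactly how many points each factor contributed to an alert, so a reviewer can see why it fired. This is a minimal sketch in Python, assuming hypothetical factor names, weights and an alert threshold invented purely for illustration; it is not a production AML model, and many XAI approaches instead explain more complex models after the fact.

```python
from dataclasses import dataclass

# Hypothetical risk factors and weights, invented for this sketch. In practice
# they would come from a documented, validated model.
WEIGHTS = {
    "cash_deposits_over_10k": 2.0,
    "high_risk_jurisdiction": 3.0,
    "rapid_in_out_transfers": 2.5,
    "new_account": 1.0,
}
ALERT_THRESHOLD = 4.0  # hypothetical cut-off for raising an alert


@dataclass
class Explanation:
    score: float
    alert: bool
    contributions: dict[str, float]  # factor name -> points it added to the score


def score_transaction(features: dict[str, float]) -> Explanation:
    """Score a transaction and record how much each present factor contributed."""
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in WEIGHTS.items()
        if features.get(name, 0.0)  # skip factors that are absent or zero
    }
    score = sum(contributions.values())
    return Explanation(score, score >= ALERT_THRESHOLD, contributions)


def reason_codes(expl: Explanation) -> list[str]:
    """Human-readable reasons for the score, largest contribution first."""
    ranked = sorted(expl.contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [f"{name}: +{points:.1f}" for name, points in ranked]


if __name__ == "__main__":
    result = score_transaction({"high_risk_jurisdiction": 1, "rapid_in_out_transfers": 1})
    print(result.alert, result.score)
    for reason in reason_codes(result):
        print(reason)
```

Because every point in the score traces back to a named factor, the reason codes can be stored alongside the alert and checked by the investigating officer, which is exactly what a black box cannot offer.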

Explainable AI (XAI) is now a regulatory expectation rather than a luxury reserved for larger firms with budgets to spare. Adopting XAI as a baseline sets the compliance function up for success when developing its models, and an understanding of today’s AI governance is paramount to meeting increasingly strict AI-related laws. This involves:

- Documenting how their AI models are built, trained and validated against audited data.

- Understanding how machine learning models evolve over time as data volumes grow and AML systems move to the cloud.

- Knowing how to interpret AI-generated decisions in light of contextual nuance and regional or sector-specific risk factors.

- Demonstrating where (and how) AI decisions are logged for regulatory supervision.

- Putting controls in place to prevent model drift and bias, and showing compliance leads how to identify them.
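Of the controls above, drift monitoring lends itself to a short example. One widely used heuristic is the population stability index (PSI), which compares the distribution of a model’s scores in a recent period against a baseline period: PSI is the sum over bins of (a − e) × ln(a / e), where e and a are the baseline and recent proportions of scores falling in each bin. The Python sketch below is illustrative only. It assumes equal-width bins over the baseline’s range and the commonly quoted rule-of-thumb bands (below 0.1 stable, 0.1 to 0.25 worth investigating, above 0.25 significant); a firm’s own model validation policy should set the real thresholds.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline score sample (expected) and a recent one (actual).

    Uses equal-width bins over the baseline's range; recent values outside that
    range are clamped into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps stops empty bins from producing log(0) or division by zero
        return [max(c / total, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi):
    """Map a PSI value to the rule-of-thumb bands assumed in this sketch."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "investigate"
    return "significant drift"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]             # last quarter's alert scores
    recent = [min(v + 0.3, 0.99) for v in baseline]      # scores shifted upwards
    psi = population_stability_index(baseline, recent)
    print(round(psi, 3), drift_status(psi))
```

Logging the PSI for each monitoring period also gives auditors a simple, reproducible record of when drift was detected and escalated.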

Clearly, this requires both hands-on experience with AI systems and documented ‘best practice’. That documentation provides a comprehensive manual for the many teams that must be adept with AI in compliance work, as well as a framework that keeps an organisation transparent about its ethical AI use and compliant as mandatory audits become a regular occurrence.

Balancing AI and human accountability

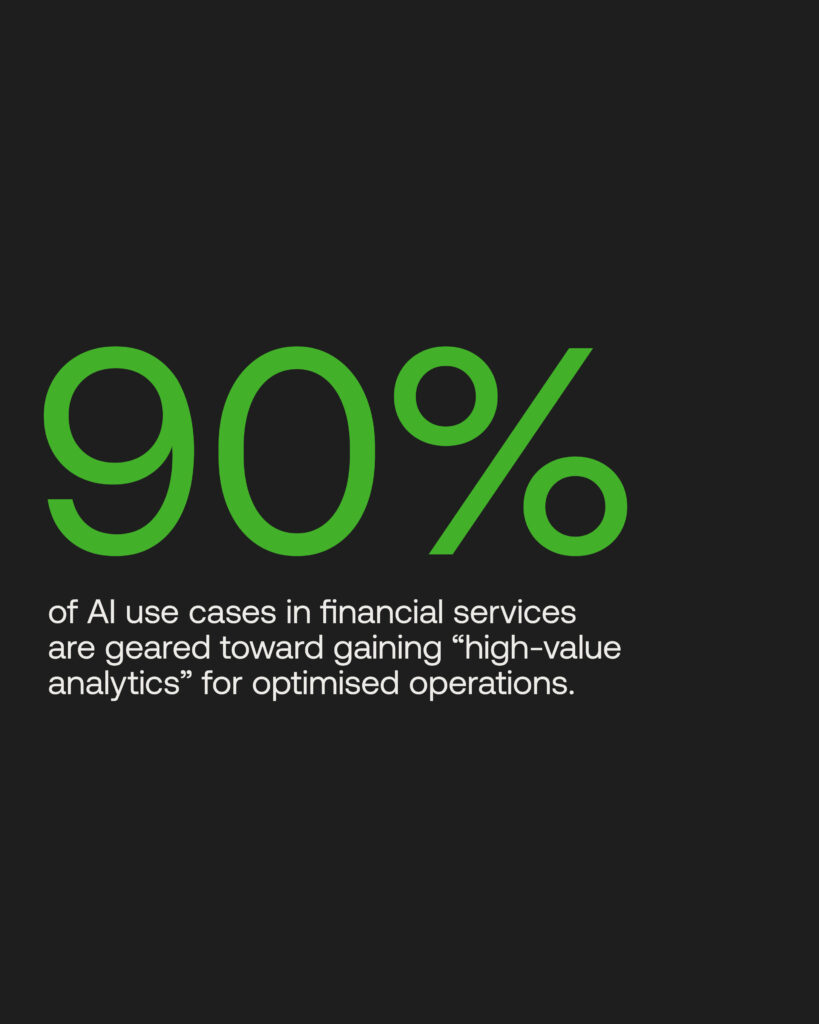

Regulatory leaders have been looking to AI to make their complex workflows far more manageable; 90% of AI’s use cases in financial services are geared toward gaining “high-value analytics” for optimised operations. That is entirely reasonable: the sheer daily volume of customers and transactions makes wrongdoing harder to spot, and any manual effort and cost spent investigating false positives and legitimate fund flows is wasted.

To use AI effectively, though, financial crime teams have no choice but to add ‘technologist’ to their CVs. That skill set ensures automation genuinely makes their lives easier, including streamlining high-quality alerts into their hands so they can turn evidence into prosecutions. Faster workflows should not make them rest on their laurels; professional curiosity, moral judgement and a commitment to customer and business-wide safety are purely human qualities that underpin ethical AI.

This is why compliance professionals will always work alongside AI-led AML systems. They navigate the intersecting challenges of regulatory oversight, budget allocation, and the impact on customers’ sensitive data and digital experiences (such as onboarding), while protecting the integrity of the brand as the business grows. AI may do the legwork, but the final call is in the compliance leader’s court.

AI literacy through cross-functional collaboration

Just as individuals in financial crime units are upskilling their AI knowledge and capability, the growing sophistication of the technology is dramatically changing the makeup of compliance teams. Once seen as legal arms combing through complex regulatory reports, compliance leads are now proactive digital investigators on the frontline against money laundering, and trusted figureheads in a digitally transforming financial industry.

As understanding of AI adoption and implementation grows, diverse professionals with distinct skill sets can work together to improve model accuracy and explainability. In the ideal that 2026 is striving toward, a hybrid function will consist of AI specialists, data engineers and compliance leads working together with mutual understanding.

Through collaborative frameworks and idea sharing, compliance experts can deepen their understanding of AI limitations and hallucinations while gaining visibility into effective model design. In the other direction, data scientists can sharpen their grasp of global and local regulatory variations and changes, then use that knowledge to build AI anti-financial crime controls that are fit for real-world purposes.

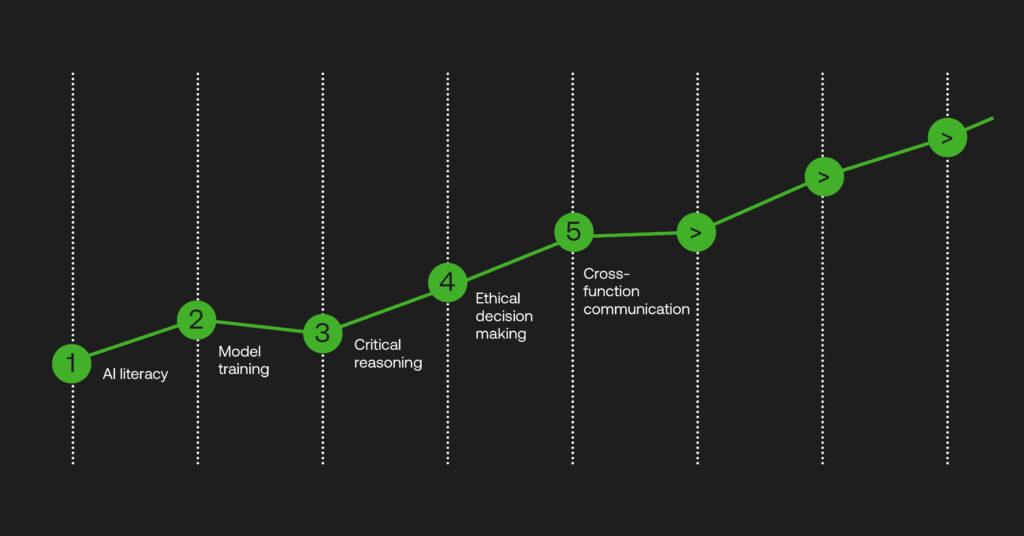

Developing a human/AI skillset step by step

- AI Literacy: Fully understanding what AI models do in AML compliance, the historical data they are trained on, and how they reach decisions, so they can enhance investigative work.

- Model Training and Governance: Updating AML systems, cleaning data, and identifying defects or recurring biases in AI models over time.

- Critical Reasoning: Challenging the assumptions of AI models against established risk control factors and human judgement.

- Ethical Decision Making: Prioritising XAI models whose decisions can be transparently audited from input to output and cross-referenced against applicable AI regulations.

- Cross-Functional Communication: Strengthening technical ability and regulatory awareness through business-wide training, internal and external learning sessions, and multi-disciplinary compliance teams.

The effect of guided AI systems

AML is a complex beast, increasingly a balancing act between technological speed, accuracy and scale on one side and the expert gut instinct of trained compliance professionals on the other. AI fits well into this mix when it is governed properly, which underlines the enduring role of human intelligence.

AI should not be relegated to a tool that merely speeds up a few time-sensitive tasks. When firms make deliberate investments in deploying AI for AML and in building a compliance function of multi-disciplinary experts, AI-led model management can have a stratospheric effect on risk-based investigations, from initial due diligence to swift reporting to regulators, government agencies and, ultimately, law enforcement.

Compliance culture is changing for the better, and the industry increasingly sees automated AML as a competitive advantage in limiting harm from non-compliance, data breaches and reputational damage. The key is to go beyond simply honing AI for actionable AML: regulatory-minded people who understand their systems’ data, capabilities and power will shape the future compliance function and strengthen the fight against criminal enterprises.